Ever since Intel, at IBM's insistence, licensed the x86 architecture and products to AMD, Intel and AMD have been the well-deserved giants of the processor field. In the PC era especially, the two had almost no competitors, and they have consistently led in chip design.

Although they missed the mobile era, the current server and AI era has given these two semiconductor "veterans" plenty of room to compete. To meet end-market demand, each has also developed its own approach to chip design and manufacturing.

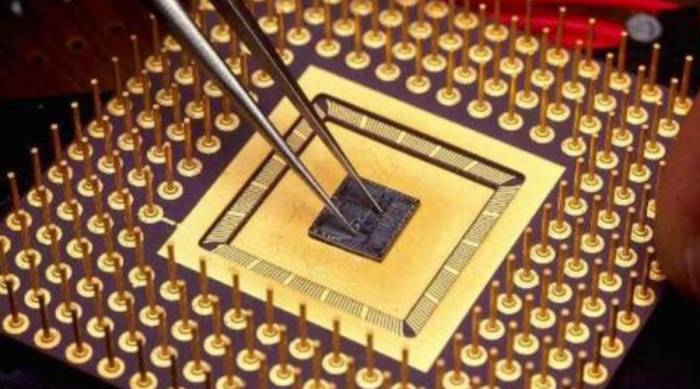

Following AMD, the pioneer in this field, Intel announced a chiplet-based product, Meteor Lake (Figure 1), at the recent Intel Innovation event. Meteor Lake reportedly combines five types of tiles: four functional tiles (CPU, I/O, graphics, and SoC) and a base tile located beneath all of them. With this chip, Intel has officially and fully entered the chiplet era.

AMD and Intel have met again on the same battlefield.

Why is Chiplet needed?

Chiplets are small modular dies that are combined to form a complete system on a chip (SoC). They can improve performance, reduce power consumption, and increase design flexibility. The concept has existed for decades: as early as May 2007, DARPA launched the COSMOS project for heterogeneous systems, followed by the CHIPS project for modular chiplet-based computing. Recently, however, chiplets have drawn attention as a way to address the difficulty of continuing to shrink traditional monolithic ICs. They are a compromise born of the tension between the bottlenecks now facing chip manufacturing and end-market demand for chip performance.

According to Yole, the innovation engine behind Moore's Law has allowed ever more devices to be integrated into the same physical area. For example, if a lithographic shrink reduces a building block by 30%, then roughly 42% more circuitry can be added without increasing the chip size. This is roughly the pace at which logic has scaled over the past few decades.
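The arithmetic behind that figure can be checked with a short sketch. It assumes the 30% reduction refers to the area of a building block (the text does not say whether it is area or linear dimension), and the function name is purely illustrative.

```python
def extra_circuitry(area_shrink: float) -> float:
    """Fractional gain in circuitry that fits in an unchanged die area.

    Assumption: `area_shrink` is the fractional reduction in the AREA of
    one building block (0.30 means each block needs 70% of its old area).
    """
    return 1.0 / (1.0 - area_shrink) - 1.0


gain = extra_circuitry(0.30)
print(f"{gain:.1%}")  # 42.9%
```

Under this reading, a 30% smaller block leaves room for about 42 to 43% more blocks, which matches the figure quoted above.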

However, although logic usually scales well, not all semiconductor devices enjoy this advantage. I/O, which can include analog circuits, scales at about half the rate of logic, and even at TSMC, the most advanced foundry, the SRAM cell has barely shrunk in the move to 3nm, which forces people to look for new approaches. In addition, a complete SoC needs not only logic gates but also many other types of circuits, along with at least a minimum level of innovation to stay competitive in the market. In view of this, designers began to opt for larger overall dies. The danger of larger dies, however, lies in their impact on yield: as a die grows, it becomes more likely to contain enough critical defects to make it fail.

Moreover, lithographic scaling is not cheap. Changing the shape and size of transistors leads to more expensive equipment and longer processing times. As a result, a wafer processed at 7nm costs more than one processed at 14nm, a 5nm wafer costs more than a 7nm wafer, and so on. When this is examined in a cost model, a clear trend emerges: as wafer prices rise, the chiplet approach becomes economically more attractive than the monolithic approach.
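The yield argument above can be made concrete with a minimal sketch using the simple Poisson yield model, Y = exp(-A × D0). Both the choice of model and the defect density `D0` are assumptions made here for illustration; the article does not give either.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero critical defects (simple Poisson model)."""
    area_cm2 = area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-area_cm2 * defects_per_cm2)


D0 = 0.1  # assumed defect density in defects/cm^2, not a figure from the article

for area in (100, 200, 400, 800):
    print(f"{area:4d} mm^2 -> yield {poisson_yield(area, D0):.3f}")
```

Whatever value is chosen for `D0`, yield in this model falls exponentially with die area, which is why a few small dies can be cheaper to produce than one large die.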

According to Yole, each new chip design requires design and engineering resources, and because new nodes are increasingly complex, the typical cost of a new design rises with each process node. This further motivates the creation of reusable designs. The chiplet design concept makes this possible, because new product configurations can be created simply by changing the number and combination of chiplets rather than starting a new monolithic design. For example, by integrating a single chiplet design into 1-, 2-, 3-, and 4-chiplet configurations, four different processor varieties can be created from a single tape-out. A purely monolithic approach would require four separate tape-outs.

Because of this, the heterogeneous chiplet integration market is growing rapidly. The chiplet market is estimated to reach $5.7 billion by 2025 and $47.2 billion by 2031. Growing demand for high-performance computing, data analysis, modularity, and customization in electronic design is driving this growth.

In summary, we believe that chiplets have the following four advantages:

1. Dividing functional blocks into chiplets keeps die size from growing. This improves yield and simplifies design and verification.

2. The best process can be selected for each chiplet. The logic can be manufactured on a cutting-edge process, high-capacity SRAM on a process around 7nm, and I/O and peripheral circuits on a process around 12nm or 28nm, reducing design and manufacturing costs. On a process around 28nm, even flash memory can be embedded.

3. Derivative products are easy to manufacture, such as the same logic with different peripheral circuits, or the same peripheral circuits with different logic.

4. Chiplets from different manufacturers can be mixed, rather than being limited to a single manufacturer.

However, judging from the latest information and products released by Intel and AMD, the two companies seem to think about chiplets differently.

Intel's Choice

From the chip named "Ponte Vecchio," we can see that Intel has made full use of the advantages of chiplets.

As shown in the figure, the total tile area of Ponte Vecchio is slightly smaller than that of "Sapphire Rapids" (Sapphire Rapids is 400 square millimeters x 4, or 1,600 square millimeters; Ponte Vecchio is less than 1,300 square millimeters in total), yet it actually has 16 tiles. It consists of one computing tile, eight Rambo cache tiles, eight HBM2e I/F tiles, and two Xe-Link tiles (there are also the base tiles, the controllers for HBM, and eight HBM stacks, but let's exclude them from the count).

The base tile is quite large, but it merely routes the wiring, integrates the HBM controller, and so on, and it is built on Intel 7. The computing tile is less than 100 square millimeters because it uses TSMC N5. The Rambo cache also uses Intel 7, while the HBM2e SerDes uses TSMC N7. Separating the functional blocks improves verification and yield, and it allows the best process to be used for each block.

Because it uses both EMIB and Foveros packaging technologies, it delivers advantages 1 and 2 listed above, although it deviates from a UCIe-compliant chiplet. In addition to the full Intel Data Center GPU Max 1550, the product line also includes the half-size Data Center GPU Max 1100, so advantages 3 and 4 can be addressed as well, although these are not a necessary condition at present. Ponte Vecchio can therefore be called a "product that correctly uses the chiplet concept."

The problem lies with Xeon Max, or more specifically, Sapphire Rapids (arranged on the far left in Figure 5).

Admittedly, it is physically a chiplet design composed of four tiles, but each tile contains every function, including CPU cores, memory controllers, PCIe/CXL, UPI, and accelerators, and each tile is 400 square millimeters. In addition, because the four tiles are arranged in this layout, two mirror-symmetric tile designs have to be prepared, so it does not deliver advantages 1 to 3 listed above.

At the DCAI investor webinar held in March of this year (2023), a sample of the successor product, Emerald Rapids, was shown (Figure 6). This time there is no need to prepare two types of tiles, but the tile size has grown to almost the exposure limit (the die size limit), and advantages 1, 2, and 3 are still completely ignored.

Although this Xeon Scalable is physically a chiplet design, its design principle differs from the "advantages of chiplets" described above. However, Intel appears quite confident. There are mainly two reasons for this.

Reason one: The chiplet has a built-in memory controller. This means that the number of memory channels will vary depending on the number of chiplets. In fact, if you look at the chart in the lower right corner of photo 2, you can find that there are:

3 chiplets: 12-channel DDR5

2 chiplets: 8-channel DDR5

1 chiplet: Left as is, this would be 4-channel DDR5, so a separate 8-channel chiplet has to be prepared.

In other words, the channel count is tied to the chiplet count. Moreover, with 4 or more chiplets, the DDR would be 16 channels or more, making it impossible to maintain compatibility with the platform.
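The coupling between chiplet count and memory channels can be sketched as follows. The figure of 4 channels per chiplet is inferred from the list above (3 chiplets giving 12 channels, 2 giving 8); the platform limit of 12 channels and all names are assumptions used only for illustration.

```python
CHANNELS_PER_CHIPLET = 4     # inferred from "3 chiplets: 12-channel DDR5"
PLATFORM_MAX_CHANNELS = 12   # assumed limit of the platform, for illustration


def ddr5_channels(chiplets: int) -> int:
    """DDR5 channels when each compute chiplet carries its own controllers."""
    return chiplets * CHANNELS_PER_CHIPLET


for n in range(1, 5):
    channels = ddr5_channels(n)
    fits = channels <= PLATFORM_MAX_CHANNELS
    print(f"{n} chiplet(s): {channels} channels, fits platform: {fits}")
```

The single-chiplet case comes out at 4 channels in this model, which is why the text says a dedicated 8-channel chiplet is needed there, and the 4-chiplet case exceeds the assumed platform limit.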

Reason two: The tile size is quite large. According to information disclosed at Hot Chips, Granite Rapids is equipped with 4MB of L3 per core. This means that the area per core is much larger than on Sapphire Rapids, which has 1.875MB per core.

The number of cores itself has not been officially announced, but according to the information currently circulating, it seems to be a maximum of 132 cores, which means 44 cores per chiplet. Including the DDR5 memory controller, there are a total of 46 blocks.

The structure of the computing chiplet for the Granite Rapids generation is estimated to be as follows (Figure 1). There are 48 blocks arranged 12x4, of which 44 are CPU cores and 2 are memory controllers (the remaining two are unknown, but they may actually be redundant CPU blocks).

The horizontal grid drawn in orange is completed within the chiplet, while the vertical grid in red connects multiple chiplets through EMIB. Thus, for a single chiplet, there are 6 grids in the vertical direction and 4 grids in the horizontal direction. For two chiplets, there are 6 grids in the vertical direction and 8 grids in the horizontal direction. For three chiplets, there are 6 grids in the vertical direction and 12 grids in the horizontal direction. The end result is 12 grids in the vertical direction and 12 grids in the horizontal direction.

So far, so good. This is one form of chiplet, but the question is, how big is this computing tile?

Take Sapphire Rapids as an example: a 400 square millimeter tile contains the equivalent of 20 blocks. In other words, each block occupies about 4 millimeters by 5 millimeters, or 20 square millimeters. In reality, the block itself is smaller, about 13.2 square millimeters, because the PHY and other components are placed around the blocks.

Now, if we ignore the PHY and assume the size of the block remains unchanged, the size of 48 blocks would be 633.6 square millimeters.
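The estimate above is simple arithmetic and can be reproduced directly. All figures come from the text; only the variable names are introduced here.

```python
TILE_AREA_MM2 = 400.0        # Sapphire Rapids tile
BLOCKS_PER_TILE = 20         # equivalent blocks per tile
BLOCK_CORE_AREA_MM2 = 13.2   # block area once the surrounding PHY is excluded
GRANITE_BLOCKS = 48          # estimated 12x4 arrangement per compute chiplet

footprint_per_block = TILE_AREA_MM2 / BLOCKS_PER_TILE
estimated_area = GRANITE_BLOCKS * BLOCK_CORE_AREA_MM2

print(f"{footprint_per_block:.1f} mm^2 per block including surroundings")  # 20.0
print(f"{estimated_area:.1f} mm^2 for 48 blocks, PHY ignored")             # 633.6
```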

In reality, because the process changes from Intel 7 to Intel 3, the area can be expected to shrink (Intel has stated that the area of the HP library on Intel 4 is 0.49 times that on Intel 7). However, the L3 cache has grown significantly, from 1.875MB to 4MB per core, and process miniaturization does little for this L3 cache, because the pitch of the wiring layers matters more than the size of the transistors. In that respect there is not much difference between Intel 7 and Intel 4, and the same may hold for Intel 3. So some shrink is likely, but nowhere near a halving. It therefore seems reasonable to assume that the 633.6 square millimeters compresses only to about 600 square millimeters.

Considering that the die will also include the PHY for EMIB and the PHY for DDR5, the area is expected to be about 700 square millimeters, with a shape that is longer in the horizontal direction. That is not much different from Emerald Rapids. In short, it is too large to be called a chiplet.

Why would Intel choose a solution that seems to have a worse yield? The author believes Intel's thinking for the designs after Sapphire Rapids (including the upcoming Granite Rapids, and even Diamond Rapids after that) is essentially: "If possible, we would build one huge monolithic die, but that is physically impossible (because of the die size limit), so we split it and then reassemble it." The way the internal mesh extends across the tiles reflects exactly this. In other words, Intel may want to keep everything on what behaves like a single chip, rather than dividing functions as much as possible.

Of course, this is better in terms of performance. Intel's giant-die approach can greatly reduce both the communication latency between CPU cores and the access latency to the memory controller. As a trade-off, verification is expected to become more complex, and yield will fall because of the larger die size.

However, AMD has a different idea on Chiplets.

AMD's Reflections on Chiplet Evolution

Firstly, let's examine the evolution of AMD's approach to Chiplet technology, which was first realized in the Ryzen processors.

The first generation of the Ryzen architecture was relatively straightforward, adopting an SoC (System on Chip) design in which everything from the cores to the I/O and controllers sat on the same die. It introduced the concept of the CCX (Core Complex), in which CPU cores were grouped into four-core units and linked using the Infinity Fabric. Two four-core CCXs formed a single die.

It is worth noting that despite the introduction of the CCX, consumer Ryzen chips were still monolithic designs. Moreover, although the L3 cache was shared among all cores within a CCX, each core had its own slice. Accessing the Last Level Cache (LLC) slice of another core was relatively slow, and slower still if that core was on another CCX. This led to poor performance in latency-sensitive applications such as gaming.

In the Zen+ era the situation remained largely the same (apart from a node shrink), but Zen 2 was a significant upgrade. It was the first consumer CPU design based on chiplet technology, featuring up to two compute dies (CCDs) and one I/O die. AMD added the second CCD on the Ryzen 9 parts, giving them a core count unprecedented in the consumer space.

The 16MB L3 cache was more accessible (read: faster) for all cores on the CCX, which greatly improved gaming performance. The I/O die was separated out, and the Infinity Fabric was upgraded. At this point AMD was still a bit slower in gaming, but it offered better content creation performance than its competitor, Intel's Core chips.

Zen 3 further refined the chiplet design by eliminating the CCX and merging eight cores and 32MB of cache into a unified CCD. This greatly reduced cache latency and simplified the memory subsystem. For the first time, AMD's Ryzen processors offered better gaming performance than their main competitor, Intel.

Zen 4 did not make significant changes to the CCD design, aside from a node shrink.

Turning to the EPYC series processors, the first generation of AMD EPYC processors is based on four replicated chiplets. Each chiplet has 8 "Zen" CPU cores, 2 DDR4 memory channels, and 32 PCIe lanes to meet performance goals. AMD had to set aside some additional area for the Infinity Fabric interconnect between the four chiplets.

According to relevant estimates, at the 14-nanometer process each chiplet has a die area of 213 square millimeters, for a total die area of 4 x 213 = 852 square millimeters. Compared with a hypothetical monolithic 32-core die, this represents roughly a 10% die area overhead. Based on AMD's internal yield modeling, which uses historical defect density data for a mature process technology, the final cost of the four-chiplet design is estimated to be only about 0.59 of the monolithic approach, even though it consumes about 10% more silicon in total. Beyond reducing cost, AMD was also able to reuse the same approach across products, including using the chiplets to build 16-core parts, doubling the DDR4 channels, and providing 128 PCIe lanes.

But none of this comes for free. There is a delay when the chiplets communicate through the Infinity Fabric, and because the DDR4 memory channels are spread across the chiplets, access to them is not uniform, so some memory requests must be handled carefully. Therefore, in the second generation of AMD EPYC processors (Rome), AMD adopted a design built from two types of chiplet.

The first chiplet type in second-generation AMD EPYC is the I/O die (IOD), implemented on a mature and economical 12nm process. It includes 8 DDR4 memory channels, 128 PCIe Gen4 lanes, other I/O (such as USB and SATA), the SoC data fabric, and other system-level functions. The second chiplet type is the core complex die (CCD), implemented on the 7nm node. In the actual product, AMD assembles one IOD with up to 8 CCDs. Each CCD provides 8 Zen 2 CPU cores, so this arrangement can provide 64 cores in one socket.
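The shape of this cost argument can be illustrated with a minimal sketch based on a simple Poisson yield model. This is not AMD's internal model: the defect densities are assumed values, packaging and test costs are ignored, and the monolithic die area is derived from the roughly 10% overhead quoted above, so the result should not be expected to reproduce the 0.59 figure.

```python
import math


def poisson_yield(area_mm2: float, d0_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson model."""
    return math.exp(-(area_mm2 / 100.0) * d0_per_cm2)


def silicon_cost_ratio(n_chiplets: int, chiplet_mm2: float,
                       mono_mm2: float, d0_per_cm2: float) -> float:
    """Silicon spent per good product: chiplet design relative to monolithic."""
    chiplet_cost = n_chiplets * chiplet_mm2 / poisson_yield(chiplet_mm2, d0_per_cm2)
    mono_cost = mono_mm2 / poisson_yield(mono_mm2, d0_per_cm2)
    return chiplet_cost / mono_cost


CHIPLET_MM2 = 213.0
TOTAL_MM2 = 4 * CHIPLET_MM2    # 852 mm^2, as stated in the text
MONO_MM2 = TOTAL_MM2 / 1.10    # implied by the ~10% area overhead

for d0 in (0.05, 0.10, 0.20):  # assumed defect densities in defects/cm^2
    ratio = silicon_cost_ratio(4, CHIPLET_MM2, MONO_MM2, d0)
    print(f"D0={d0:.2f}/cm^2 -> chiplet/monolithic cost ratio {ratio:.2f}")
```

With zero defects the chiplet design would cost 10% more, purely because of the extra area. As the assumed defect density rises, the yield advantage of the smaller dies outweighs that overhead and the ratio drops below 1.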

In the third generation of EPYC processors (Milan), AMD offers up to 64 cores and 128 threads, using its Zen 3 cores. The processor is designed with eight chiplets of eight cores each, similar to Rome, but this time all eight cores in a chiplet are connected, giving an effectively doubled L3 cache design with lower overall cache latency. All processors are equipped with 128 PCIe 4.0 lanes and 8 memory channels, most models support dual-processor configurations, and there are new options for memory channel optimization. All Milan processors should be directly compatible with Rome series platforms through a firmware update.

In the fourth generation of EPYC processors, AMD uses a chiplet design with up to 12 core complex dies (CCDs), where the central I/O die uses a 6nm process and the surrounding CCDs use a 5nm process. Each CCD has 32MB of L3 cache, and each core has 1MB of L2 cache. AMD's EPYC design is a fully integrated chiplet design, also known as a system on a chip. This means that all core components (such as the memory and SATA controllers) are integrated into the processor, so a powerful chipset on the motherboard is no longer needed, which reduces cost and improves efficiency.

Sam Naffziger, AMD's product technology architect, also said in a paper that AMD is one of the first companies to commercialize silicon interposer technology, which gives it more advantages in product design. Earlier, in an interview with IEEE, he said, "One of the goals of our architecture is to make it completely transparent to software, because software is difficult to change. For example, our second-generation EPYC CPU is composed of a centralized I/O chip surrounded by computing chips. When we use a centralized I/O chip, it reduces memory latency and eliminates the software challenges of the first generation."

In terms of memory controllers, unlike the Intel approach described above, AMD moved the memory controller to the IOD, and the CCD contains only CPU cores and L3 cache. As a result, there are configurations with 4, 8, or 12 CCDs, yet all of them can use 12-channel DDR5.

In the EPYC series, AMD has consistently used the Infinity Fabric to connect the CCDs and the memory controller, which both increases flexibility and reduces cost. However, AMD cannot escape the performance penalty from the added latency of the Infinity Fabric either. Even so, AMD has managed to minimize the impact through measures such as a large-capacity L3 cache, which does not seem to be an option for Intel.

As Sam Naffziger stated, AMD is still finding ways to scale logic, but SRAM is more challenging, and analog circuits do not scale at all. Therefore, AMD has already split the analog off into the central I/O chiplet. With 3D V-Cache (a high-density cache chiplet that is 3D-integrated with the compute die), AMD has separated out the SRAM as well. Looking ahead, the company may make more moves of this kind.

Finally, we reiterate that this analysis of the two companies' chiplet strategies is based on the products seen so far and does not represent their final strategies. Nevertheless, these analyses can undoubtedly offer more food for thought for chiplet design.
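The effect of placing the memory controller on the IOD can be sketched with a small data model. The class and field names are hypothetical, and the figure of 8 cores per CCD is an assumption carried over from the Rome description earlier in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpycPackage:
    """Illustrative model: compute scales with CCDs, memory is fixed by the IOD."""
    ccd_count: int
    cores_per_ccd: int = 8       # assumption, taken from the Rome description
    iod_ddr5_channels: int = 12  # memory controllers live on the I/O die

    @property
    def cores(self) -> int:
        return self.ccd_count * self.cores_per_ccd

    @property
    def memory_channels(self) -> int:
        # Independent of ccd_count because the CCDs carry no memory controllers.
        return self.iod_ddr5_channels


for ccds in (4, 8, 12):
    pkg = EpycPackage(ccd_count=ccds)
    print(f"{ccds:2d} CCDs: {pkg.cores:3d} cores, {pkg.memory_channels} DDR5 channels")
```

In this model the core count varies with the number of CCDs while the memory channel count stays constant, which is what lets every configuration share one platform.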