"The definition of 'Moore's Law' almost refers to anything related to the semiconductor industry, and when plotted on semi-log paper, these laws approximate a straight line." —— Gordon Moore.

In this article, we explore the history and current status of Moore's Law. It is not a detailed account of that history, nor of the technical basis for the future development of semiconductors. Instead, it attempts to provide a high-level overview of Moore's Law and how it has developed. Along the way, I am delighted to draw on Gordon Moore's own views in several places.

Any discussion about the future of semiconductors is likely to start with Moore's Law. The recent article "Not quite dead yet" in The Economist still follows this pattern, in which they wrote: "Two years shy of its 60th birthday, Moore's Law has become a bit like Schrödinger's hypothetical cat - at once dead and alive."

In recent years, Moore's predictions have dominated popular discussion of the subject. A Google Ngram chart shows that, over the past decade, "Moore's Law" has appeared in published books almost as frequently as the "integrated circuits" that the law describes.

Perhaps we should not be surprised. As personal computers, the internet, and smartphones have changed our lives and society, "Moore's Law" has taken on cultural significance, reflecting the importance of these changes.

However, many discussions of Moore's Law have been imprecise. Some commentators have forgotten, or chosen not to discuss, what Moore's Law actually says. For example, the recent article in The Economist mentioned some important and interesting new technologies but, notably given the name of the newspaper, ignored the economics at the core of the "law."

This lack of precision may be the root of the divergence mentioned by The Economist. Is Moore's Law still applicable or has it ended? Let's listen to the views of two industry leaders:

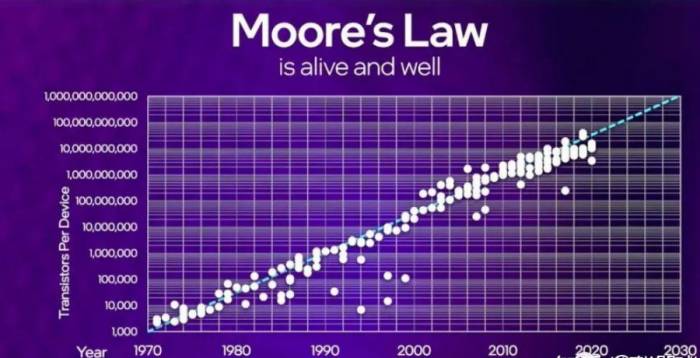

NVIDIA CEO Jensen Huang said in September 2022 that Moore's Law is dead.

Intel CEO Pat Gelsinger stated in the same period that Moore's Law is "alive and well."

This is not the first time that Moore's Law has been declared dead, or at least close to the end of its life. It is the latest round of the "end of Moore's Law" debate, which has been a hot topic since at least the late 1990s.

Intel's Mark Bohr said: "The end of Moore's Law is always 10 years away. Yes, it's still 10 years away."

Uncertainty about the future of Moore's Law is inevitable. Whenever technical obstacles are foreseeable, it is natural to question whether the law will continue to hold. But as we know, the semiconductor industry has broken through such obstacles time and time again.

In this article, we will re-examine Moore's original predictions and revised predictions. We will try to understand the true meaning of Moore's Law. Today we will look at various statements about Moore's predictions.

Before Moore's Law

Gordon Moore was not the first person to predict a significant increase in the number of transistors on a single integrated circuit. At the IEEE meeting held in New York in 1964, Harry Knowles of Westinghouse predicted: "By 1974, we will have 250,000 logic gates on a single wafer."

Moore was in the audience at the time, and he later recalled that he thought Knowles's prediction was "ridiculous." The company he worked for, Fairchild, was struggling to fit "a few" logic gates on a one-inch wafer. Other speakers at the conference took more conservative views of the development of semiconductor technology, and they too considered Knowles's prediction "crazy."

Moore's Law (1965 Edition)

However, Moore soon found that Knowles's prediction was not as crazy as he had imagined. In 1965, when he was asked to write an article about the future of the semiconductor industry for "Electronics" magazine, he looked at the data on what had been achieved so far:

I saw that the minimum cost per component point had been coming down quickly over several years, as the manufacturing technology improved. From this observation, I took my few data points and plotted a curve, extrapolating out for the ten years I had been asked to predict.

Moore later described his method in more detail:

"Adding points for integrated circuits starting with the early 'Micrologic' chips introduced by Fairchild, I had points up to the 50-60 component circuit plotted for 1965... On a semi-log plot these points fell close to a straight line that doubled the complexity every year up until 1965. To make my prediction, I just extrapolated this line another decade in time and predicted a thousand-fold increase in the number of components in the most complex circuits available commercially.

The essence of this inference would form the core of "Moore's Law":

"With unit cost falling as the number of components per circuit rises, by 1975 economics may dictate squeezing as many as 65,000 components on a single silicon chip.

This was not exactly the same as the prediction of Knowles from Westinghouse Electric, but it was still "crazy."

Moore later said that he did not expect the prediction to be followed so precisely:

"I was just trying to get across the idea this was a technology that had a future and that it could be expected to contribute quite a bit in the long run."If we adopt the numbers cited by Moore, we have 50-60 components in 1965, increasing to 65,000 in 1975, a roughly 1,000-fold increase within a decade. This corresponds to a doubling of the number of components each year over the decade.

Moore's Law (1975 Edition)

A friend of Moore, Carver Mead from the California Institute of Technology, quickly dubbed this prediction "Moore's Law." In 1975, Moore revisited "Moore's Law." Looking at his latest data, he proposed a revised forecast:

"By the end of the decade, the new slope might approximate a doubling every two years, rather than every year." (Original text: The new slope might approximate a doubling every two years, rather than every year, by the end of the decade.)

Moore then used this new slope to infer another ten years, that is, 1985.

There is also a version of this "1975 Law" that involves doubling (computer performance) every 18 months, which Moore attributed to Dave House of Intel:

Now the progress is doubling every 18 months...

I think it was Dave House, who used to work here at Intel, who did that. He decided that the complexity was doubling every two years and the transistors were getting faster, that computer performance was going to double every 18 months... but that's what got on Intel's Website... and everything else. I never said 18 months, that's the way it often gets quoted.

For the rest of this article, we will adhere to Moore's own predictions.

The Practice of Moore's Law

So, how did Moore's predictions turn out?

In 1995, Gordon Moore revisited his prediction in an article titled "Lithography and the Future of Moore's Law." He drew a chart showing that although his predictions were not precisely fulfilled, the rate of change was tracked quite accurately.

On the 40th anniversary of his initial prediction, Moore re-examined his forecast once again. He found, once more, that his revised predictions worked out well.

For the latest update as of 2020, we can visit the "Our World in Data" website, which displays the historical trend of the number of transistors on CPUs:

In 1965, Gordon Moore predicted that this growth would continue for at least another 10 years. Was he right?

In the chart, we see the increase in transistor count (the number of transistors on an integrated circuit) since 1970.

It looks strikingly similar to the simple chart Moore drew in 1965. Note again that the number of transistors is on a logarithmic scale, so a linear relationship over time means that the growth rate is constant.

This means that the number of transistors has actually been growing exponentially.

The chart compares the number of transistors in CPUs and GPUs from 1969 to 2019 with Moore's prediction (as revised in 1975).
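To see why a straight line on a logarithmic scale means exponential growth, here is a minimal Python sketch. It projects a transistor count forward at a fixed two-year doubling period and checks that the logarithm of the count rises by the same amount every decade. The starting point (the Intel 4004 of 1971, with about 2,300 transistors) and the function name `projected_count` are assumptions made for illustration and are not read off the chart.

```python
import math

def projected_count(start_count, start_year, year, doubling_years=2.0):
    """Count projected from a starting point at a fixed doubling period."""
    return start_count * 2 ** ((year - start_year) / doubling_years)

# Illustrative starting point (an assumption of this sketch):
# the Intel 4004 of 1971, with about 2,300 transistors.
years = [1971, 1981, 1991, 2001, 2011]
log_counts = [math.log10(projected_count(2_300, 1971, y)) for y in years]

# Differences between successive decades on the log scale.
steps = [later - earlier for earlier, later in zip(log_counts, log_counts[1:])]

for year, log_count in zip(years, log_counts):
    print(year, f"log10(count) = {log_count:.2f}")
print("step per decade:", [f"{s:.3f}" for s in steps])
```

Every step comes out the same (five doublings per decade), which is exactly what a straight line on semi-log axes looks like.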

Moore later commented:

We cannot predict what will happen in the future. It was just a lucky guess, I think, as far as I am concerned... a fortunate inference.

So Moore's prediction turned out to be a "lucky guess"!

How was this progress achieved?

To gain a deeper understanding of how Moore's prediction was realized in practice, it is worth considering how this progress was achieved. Moore studied this in 1975:

He divided the improvements into three parts (see the figure above for Moore's historical analysis and short-term inference since 1975):

1. Reducing component size;

2. Increasing the size of semiconductor chips;

3. What he referred to as the contribution of "Device and Circuit Cleverness."

Perhaps surprisingly, less of the growth came from "scaling down" and more from what Moore called "Device and Circuit Cleverness." What did he mean by that last item?

I believe this factor is squeezing wasted space in the chip, eliminating isolation structures, and various other things.

He also found that the contribution of "increasing chip size" was close to that of smaller components. In 1975, the Intel 8080 microprocessor die measured 20 mm². Today, the Apple M1 Max die measures 425 mm². That growth factor of roughly 20 is less than Moore's extrapolation implied, but it still represents a significant contribution to the progress of the law over the past few decades.

Moore was not only concerned with the growth of chip size. He also considered the growth of wafer size: by the time he wrote this retrospective article, wafers had grown from three-quarters of an inch to 300 millimeters. That is still well short of the 57-inch wafer implied by one of his extrapolations. However, Intel did illustrate what this might mean in practice.

Moore's Law: Myth and Reality

Before we continue, we should address some popular myths about Moore's Law and emphasize some key points. Moore's Law:

1. Is not a "natural law."

It does not follow from the underlying physical or chemical properties of the devices, although the underlying physics and chemistry do, of course, ultimately limit how small components can become.

2. Does not predict exponential growth in computer performance.

We have already seen that Moore did not predict performance doubling every 18 months. More components on a chip can lead to performance improvements, but the relationship is complex, and the end of Dennard scaling around 2006 means that even while Moore's Law continues to hold, the rate of performance growth has slowed down.

3. Is not just about shrinking the size of components.

See the discussion above and below.

4. Does predict how the number of components on an integrated circuit changes at the best unit economics (i.e., the lowest cost per component).

(1) It does not describe the progress of the maximum possible number of components on an integrated circuit;

(2) We can illustrate this with the graph from Moore's original 1965 paper, which shows that circuits with more components were possible but had worse unit economics. Moore's prediction was about the minimum of these curves.

It is crucial that the cost per component decreases exponentially. If it did not, the cost of integrated circuits with an exponentially increasing number of components would itself increase exponentially.

By the way, why does the manufacturing cost chart for each component look like this? A clue is provided in a paper published by Harry Knowles of Westinghouse before Moore's first article. It is the product of the "yield curve" and the "100% yield cost curve per component."
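The shape of such a curve can be sketched with a toy model in Python. Everything here is an assumption made for illustration, not Knowles's or Moore's actual model: a fixed cost per circuit is spread across its components (the 100%-yield cost), the yield falls off exponentially as components are added, and the 100%-yield cost is scaled by the reciprocal of the yield. The constants `FIXED_COST_PER_CIRCUIT` and `DEFECT_RATE` are hypothetical.

```python
import math

FIXED_COST_PER_CIRCUIT = 1.0  # hypothetical cost units per circuit
DEFECT_RATE = 0.02            # hypothetical defect rate per component

def cost_per_component(n):
    """Cost per working component for a circuit with n components."""
    perfect_yield_cost = FIXED_COST_PER_CIRCUIT / n  # cost at 100% yield
    yield_fraction = math.exp(-DEFECT_RATE * n)      # fraction of good circuits
    return perfect_yield_cost / yield_fraction

# Search a range of circuit sizes for the cheapest cost per component.
best_n = min(range(1, 501), key=cost_per_component)

print("components at minimum cost:", best_n)
for n in (10, best_n, 200):
    print(n, f"{cost_per_component(n):.4f}")
```

With too few components the fixed cost dominates, and with too many the falling yield dominates, so the curve has a minimum in between. In this toy model, lowering `DEFECT_RATE` moves that minimum toward larger circuits, which is the direction of movement Moore's prediction tracks.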

Finally, back to Moore's Law:

It has been used to create a timetable around which the semiconductor industry can self-organize.

Moore's Law ultimately became a self-fulfilling prophecy, partly because companies organized themselves around Moore's predictions. So perhaps it owed more to planning than to luck!

Moore's Law as Economics

If Moore's Law is not a natural law, then what exactly is it? We already have clues! Let's revisit Gordon Moore's statement. He said: "Moore's Law is really about economics. My prediction was about the future direction of the semiconductor industry, and I have found that the industry is best understood through some of its underlying economics."

Simply saying "it's about economics" does not really help us understand what is going on. What surprised me in researching this article is how little seems to have been written about the economics behind Moore's Law. Perhaps this is due to the complexity of the subject and the fact that it sits at the intersection of two professions. Moore's Law is the end result of a series of highly complex interactions between the economics of semiconductor manufacturing and the underlying technology.

To cut through this complexity, I think one (very simple) way to think about Moore's Law is as a statement of a virtuous cycle:

Creating more complex devices... leads to...

A larger market for these devices... which in turn stimulates...

Investment in research and development and more complex manufacturing... which in turn leads to...

The creation of more complex devices...

...and so the cycle continues.

Moore had seen what pace of technological innovation was feasible at the companies he worked for (first Fairchild Semiconductor, then Intel). And what is feasible depends partly on the level of investment that companies can afford.

As mentioned above, this cycle is, of course, a simplification of the actual situation. It ignores competition among semiconductor manufacturers, which in practice is a major factor driving them to develop more advanced devices. However, I find it interesting that, according to the above model, competition among companies is not a prerequisite for maintaining this virtuous cycle.

Another simplification of this model is that participants can look beyond a two-year cycle, predict future improvements, and prepare for the needs of later cycles.

This is one of the wonders of Moore's Law. By setting a timetable for these developments, companies can collectively organize to achieve these advancements.

I have reason to believe that this is one of the reasons that prompted Moore to pose the question in this way. By outlining the pace of improvements he thought could be anticipated, he prompted suppliers and customers to prepare for these improvements.

The actual pace of these improvements is also important. Moore used his observations and experience to set a pace of improvement that he believed could be sustained. Had he been wrong, the virtuous cycle could have been disrupted. Moving too fast risks the technology outpacing itself, so that the more complex devices that are needed cannot actually be manufactured; moving too slowly will not stimulate the demand necessary to sustain the investment required to manufacture these devices. By maintaining a controlled but meaningful pace of progress, the momentum can be sustained. A side benefit of making this pace public is that, while individual companies might try to accelerate to gain a competitive advantage, an ecosystem developing at a consistent pace will limit them.

The End of Moore's Law

Moore made his last set of predictions in 2005: "It is amazing what a group of dedicated scientists and engineers can do, as the past forty years have shown. I do not see an end in sight, with the caveat that I can only see a decade or so ahead."

We have now passed the "decade or so" that Moore thought he could foresee. Can we now talk more about when "Moore's Law" will end?

The first thing to point out is that an exponential law like Moore's Law is inevitably going to end at some point. The number of components on an integrated circuit cannot continue to double "forever."

Then, if we go back to the virtuous cycle, we find that this cycle may be broken because it fails to do the following:

1. Create more complex devices, or

2. Create/expand the market for these devices, or

3. Stimulate investment in R&D and advanced manufacturing

Let's take a look at these potential "barriers" one by one.

Barrier One: Physical Limitations and Roadmaps

The Economist article we mentioned at the beginning of this article focused on some of the technical obstacles encountered in the process of creating more complex devices. It highlighted some measures attempting to bypass these obstacles, ranging from "almost ready for production" to "somewhat speculative," including:

1. Transitioning from "finFET" to "nanosheets";

2. Backside power delivery;

3. Alternatives to silicon, including "transition metal dichalcogenides";

All of these, in one way or another, are means to an end: further miniaturization of components.

As we have pointed out, Moore's Law has been used to create a timetable around which the semiconductor industry can self-organize. At this point, we can refer to the current schedule set out in the "International Roadmap for Devices and Systems" (IRDS).

The executive summary of the 2023 roadmap can be downloaded for free. It is an engaging and not too lengthy 64-page read that provides a wealth of detail on possible developments in lithography, materials science, metrology, and other key aspects of chip manufacturing.

We do not intend to summarize the content of the report here. Instead, we will focus on one aspect of the manufacturing process that could potentially end Moore's Law.

Although the "headline" of Moore's Law does not directly specify smaller components, as we have seen, creating smaller components through the so-called "node scaling" is key to achieving an exponential increase in components per chip in practice.

At this point, we need to clear up a common misconception. Perhaps the least helpful contribution to the public's understanding of Moore's Law is the naming of "process nodes." In fact, node names that look like physical lengths, such as 5nm, 3nm, 18A, etc., have nothing to do with the actual size of the components. It is therefore not surprising that there is a widespread belief that we have reached a fundamental limit because component sizes are approaching the atomic scale. As Samuel K. Moore said in an article titled "It's time to throw out the old Moore's Law metric" published earlier in IEEE Spectrum:

"After all, 1 nm is less than the width of five silicon atoms. So you might think that Moore's Law will soon disappear, and the progress in semiconductor manufacturing will not make further leaps in processing power, and solid-state device engineering is a dead-end career path. But you are wrong. The picture depicted by the semiconductor technology node system is wrong. Most of the key characteristics of 7 nm transistors are actually much larger than 7 nm, and the disconnection between the term and physical reality has lasted for about twenty years."

Samuel K. Moore also gave an example to illustrate what this means in practice:

"The chairman of the IEEE International Roadmap for Devices and Systems (IRDS), Gargini, proposed in April that the industry 'return to reality' and adopt a three-number measurement that combines contact gate pitch (G), metal pitch (M), and the number of device layers on the chip (T) is crucial for future chips."

"All three parameters are the complete information needed to assess the transistor density," said ITRS director Gargini.

The IRDS roadmap shows that a 5 nm chip has a contact gate pitch of 48 nm, a metal pitch of 36 nm, and a single layer, i.e., the metric G48M36T1. It is not exactly what is said verbally, but it does convey more useful information than "5-nanometer node."

Therefore, these components are actually much larger than the node name implies.

Even so, the components are still becoming very small, and will ultimately run into the limits of EUV lithography.

Of course, we have seen such limits before. EUV was able to break through the limits of DUV, but at a price: cost.

Barrier Two: Node Shrinks Lead to Rising Costs

This cost brings us to the second potential obstacle, which is the need to create or expand the market for more complex integrated circuits. First, though, a caveat: what follows is inevitably an extremely simplified discussion of some aspects of the basic economics of manufacturing chips.

It is worth noting that not only is the number of components on an integrated circuit growing exponentially (in line with the law), but the price of these integrated circuits is still affordable, which in turn means that the cost per component is also decreasing exponentially. Despite the rising costs of semiconductor factories, this is still the case.

Gordon Moore articulated what later became known as "Moore's Second Law" or "Rock's Law" (named after Arthur Rock, who helped finance Intel and served as chairman of the company for many years), which states that the cost of a semiconductor chip fabrication plant doubles every four years.

Moore himself was keenly aware of the increasing cost of lithography tools. This is the chart from his 1995 paper:

And here is the "stepper" price chart drawn by the U.S. trade organization Sematech at the beginning of this century.

The cost of "cutting-edge" lithography tools continues to rise rapidly. ASML has just delivered its first "high numerical aperture" EUV system to Intel, reportedly priced at $275 million.

Only if the companies using the equipment can increase sales can the equipment prices continue to rise over a longer period. And they have indeed done so. This is TSMC's revenue over the past twenty years.

Higher Costs and Higher Utility

But what if this growth ends? Let's consider what might happen if higher costs from more expensive lithography tools or other reasons, combined with static demand, eventually lead to higher prices.

Does it make economic sense to have higher prices? Only if users derive corresponding value from these more expensive chips. We can easily identify examples of such sources of value:

1. Lower power consumption: Reducing the cost of the entire lifecycle of integrated circuits, or extending the battery life of portable devices.

2. Higher utility: The ability to integrate more functions and performance into a single integrated circuit.

But at some point, the utility of smaller nodes is not sufficient to justify the higher costs. Even if these smaller nodes continue to have the best unit cost benefits according to Moore's Law, they may still mean "per chip" costs so high that they cannot be justified.

For example, Apple may currently be willing to pay a higher price for TSMC's latest wafers, which will be used in the most expensive iPhones. But if prices continue to rise, this situation cannot continue indefinitely. There is ultimately a limit to the price consumers are willing to pay for high-end smartphones.

Then we need to remember that increased investment requires increased demand. Higher wafer costs inevitably reduce demand, thereby breaking the virtuous cycle of increasing demand and investment that has driven Moore's Law for decades.

Perhaps there will be new sources of demand for the most advanced semiconductors, which will help maintain investment and reduce unit costs in the long run. Perhaps new applications from machine learning? We will have to wait and see.

Ultimately, even if the nodes continue to shrink, the rising cost of chips means that the economics of the "virtuous cycle" will collapse without additional demand.

Investment and the "Chip Race"

Even if the economics of manufacturing at more advanced nodes no longer make sense, politics, and especially geopolitics, may come into play. Here are some recent headlines:

1. The United States shows strong interest in financing $52 billion for semiconductor chips (August 2023)

2. Brussels approves €8 billion in new subsidies for European semiconductor manufacturing (June 2023)

3. Japan prepares $13 billion to support the country's chip industry (November 2023)

4. Facing U.S. restrictions, China is ready to provide a huge package for its chip companies (December 2022)

Currently, we are in a "chip race," with countries competing to invest funds to create new "wafer fabs." What these countries really want is "cutting-edge" manufacturing technology.

So perhaps governments could maintain this virtuous cycle for a while by funding the investment needed for research and development and advanced manufacturing. I say "perhaps" because it is not certain that the cash in these headlines will actually be spent or that, if it is, it will be spent well and will promote the development of the most advanced technologies.

Moreover, at some point, even the government will run out of cash, realize that it cannot compete, or see no value in further investment.

Moore himself was keenly aware that the exponential growth of demand could not continue indefinitely. Here is a chart from Moore's 1995 paper, which compares the "global gross product" with the semiconductor industry:

Moore commented on this chart as follows:

"As you can see, in 1986, the semiconductor industry accounted for about 0.1% of the GWP. Just ten years later, around 2005, if we maintain the same growth trend, we will reach 1%; around 2025, we will reach 10%. By the middle of this century, we will achieve everything. Obviously, the industry growth must slow down.

I don't know how much GWP we can achieve, but more than one percent would definitely surprise me. I think the information industry will obviously become the largest industry in the world during this period, but past large industries, such as automobiles, have not reached one percent of the GWP. Our industry growth must slow down relatively quickly. We have an inherent conflict here. Costs increase exponentially, while revenue cannot grow at the corresponding rate for a long time. I think this is at least as big a problem as the technical challenge of reaching ten microns."

According to my estimates, the total revenue of chip manufacturers in 2023 was about a quarter of one percent of global GDP, so the extrapolated trend is off by more than an order of magnitude here. But Moore's basic point still stands: this relationship will eventually limit the scale of the industry's development.
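The extrapolation in Moore's remarks can be reproduced with a few lines of Python. This sketch assumes only the figures quoted above (about 0.1% of GWP in 1986, rising tenfold by around 2005 and tenfold again by around 2025, which works out to roughly 19.5 years per tenfold increase). The function name `year_share_reaches` and its default parameters are illustrative.

```python
import math

def year_share_reaches(target_share, base_year=1986, base_share=0.001,
                       tenfold_years=19.5):
    """Year at which the industry's share of GWP would hit target_share
    if it kept growing tenfold every tenfold_years."""
    tenfold_steps = math.log10(target_share / base_share)
    return base_year + tenfold_steps * tenfold_years

for share in (0.01, 0.10, 1.00):
    print(f"{share:.0%} of GWP around {year_share_reaches(share):.0f}")
```

On this trend the industry would equal the whole of world output in the mid-2040s, which is the sense of Moore's remark about becoming "everything" by the middle of the century, and the reason the growth has to slow.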

System Integration

Let's consider the last factor. We will return to another observation in Moore's 1965 paper: that it may prove more economical to build large systems out of smaller functions.

As stated in the IEEE article cited above, Gargini said: "By around 2029, we will reach the limits of lithography technology. After that, the way forward is stacking... this is the only method to increase our density."

From the Executive Summary of the International Roadmap for Devices and Systems:

"As a means of increasing IC transistor density, feature scaling will continue to grow unabated for the next 10 years and beyond. However, transistor channel length scaling is no longer a 'must-do' to meet performance requirements, as the maximum operating frequency is limited to 5-6 GHz due to dynamic power constraints. Multi-layer NAND memory cells are being produced steadily, nanosheet transistors will follow FinFET transistors, and then stacked NMOS/PMOS transistors. Various 2.5D and 3D structural approaches will increase the component density and integration of many homogeneous and heterogeneous technologies in new revolutionary systems."

Building systems from smaller components does not only mean going "vertical" by "stacking" components. It also includes "chiplets": smaller chips connected side by side.

At Hot Chips 2019, TSMC's Philip Wong gave a talk titled "What will the next node offer us?" which began with this slide:

Then, the talk spent more than half the time discussing "system integration" or creating larger systems with smaller functions, and this slide succinctly summarized this point:

Thus, nearly six decades after Moore's initial paper, he has once again proven prescient.

Moore's Law (2023 Edition)

Let's revisit the debate on the current status of Moore's Law.

If you have been closely following the debate on Moore's Law, you will find that the aforementioned quote by Pat Gelsinger does not represent his most recent stance on the subject. Just a few months after presenting this slide at the Intel Innovation 2023 conference...

(Please note the emphasis on doubling every two years.)

...Gelsinger revised his position (my emphasis):

"Moore's Law, where essentially you were just able to shrink in the X, Y direction and being able to do .7x shrinks in the X and the Y, you're able to get this doubling every two years approximately, and that was like the Golden Era of Moore's Law. You know we're no longer in the Golden Era of Moore's Law, it's much much harder now, so we're probably doubling effectively closer to every 3 years now, so we've definitely seen a slowing." (Original text: Moore's law where essentially you were just able to shrink in the X, Y right and being able to do .7x shrinks in the X and the Y right you're able to get this doubling every two years approximately and that was like the Golden Era of Moore's law. You know we're no longer in the Golden Era of Moore's law, it's much much harder now so we're probably doubling effectively you know closer to every 3 years now so we've definitely seen a slowing.)

Thus, today Gelsinger does not really believe that the 1975 version of Moore's Law still exists.

It turns out that the answer to the riddle of whether Moore's Law is dead or alive is that when we open the box, we find that Schrödinger's unfortunate cat was actually dead. However, a close relative of it is still alive.

To be fair to Gelsinger, there has long been a degree of imprecision in the meaning of Moore's Law. Gordon Moore himself said in 1995:

"The definition of 'Moore's Law' has come to refer to almost anything related to the semiconductor industry that, when plotted on semi-log paper, approximates a straight line. I hesitate to review its origins and by doing so restrict its definition."

Let's attempt a summary:

Node scaling will continue for a while, but at a slower pace and with higher costs. However, Moore's Law is not just about shrinking components. There have been and will continue to be other ways to "pack more components into integrated circuits," including what Moore called "device and circuit cleverness" and building large systems out of smaller functions, which will continue to help extend Moore's Law (as revised) for some time.

Ultimately, it is likely to be economics, rather than physics, that brings an end to Moore's Law.
